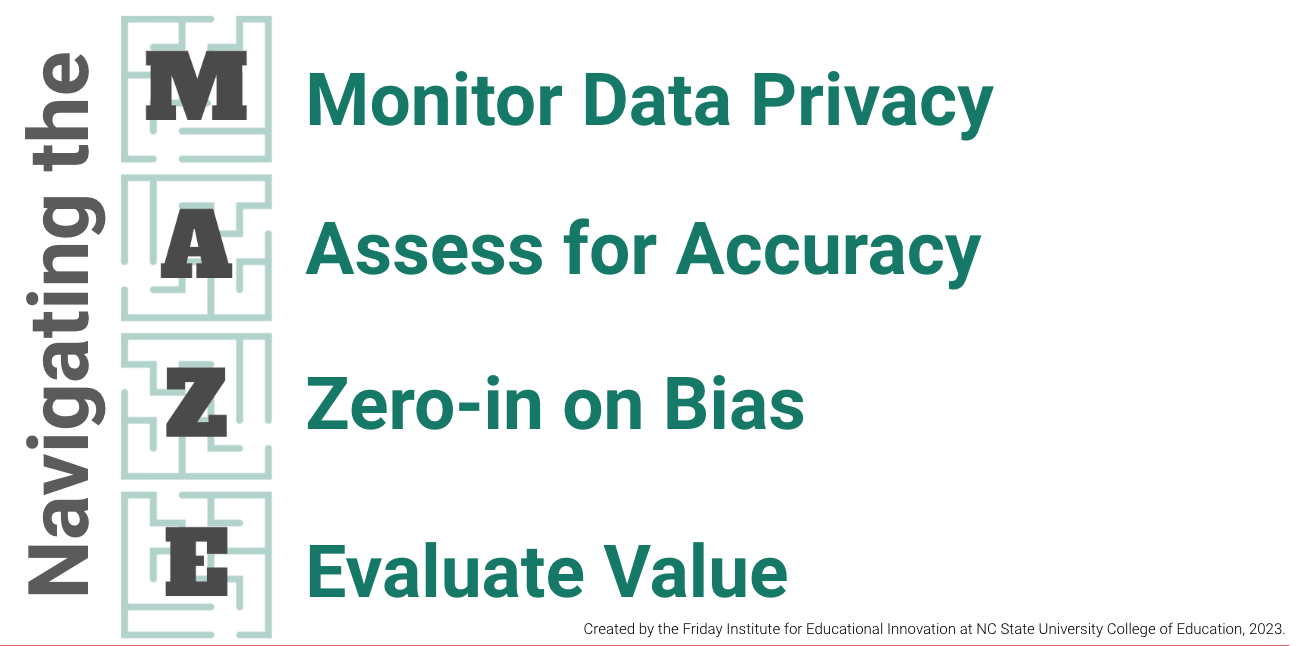

Navigate the MAZE to Select Safe and Effective AI Tools for the Classroom

Shared under CC BY-NC-SA 4.0

As more digital tools incorporate artificial intelligence (AI), this four-step approach to Navigating the MAZE can help educators evaluate and select safe and effective online platforms. Informed by the White House’s Blueprint for an AI Bill of Rights, the M.A.Z.E. acronym, developed by the Friday Institute for Educational Innovation at North Carolina State University, offers a K-12 interpretation and an easy-to-remember process for choosing the right AI tool for an education setting.

Each component includes a description, key questions to ask, and steps an educator can take to put it into action.

M: Monitor Data Privacy

The digital platform provides transparent and accessible explanations of its data practices (including what data is collected, how the data is stored and secured, how the data will be used, when the data will be deleted, etc.).

Key Questions to Consider:

- Does this platform explain its data practices in clear, everyday language that the average user could read and understand? Does this platform offer a feature for users to opt out of data collection?

- Has this platform received any recognition of alignment with safe and ethical standards for data privacy (e.g., Common Sense Privacy from Common Sense Media, Product Certifications from Digital Promise, or TrustEd Apps Certified Data Privacy from 1EdTech)?

- What are the platform’s age restrictions?

- Has this platform been developed or built using artificial intelligence technologies or systems that still need to provide evidence of their compliance with FERPA or COPPA? (For example, LLMs that are intended for individuals aged 18+ may not comply with FERPA or COPPA.)

What Can You Do to Monitor for Data Privacy?

- Find and review the platform’s Privacy Policy and/or Terms of Use.

- Look for an explanation of the platform’s data privacy practices, especially related to children’s privacy.

- If you have any questions or concerns, reach out to your school or district technology team to help determine if the data privacy practices align with your school or district’s guidelines.

A: Assess for Accuracy

The output generated by the AI tool is reliably correct and complete.

Key Questions to Consider:

- What data or information sources have been used to train the AI tool? How current are the data or information sources?

- How frequently does the platform produce a machine error?

- What is the process or option for correcting, reporting or providing feedback on the platform’s machine error? When prompted, can the AI correct its mistakes?

- Does the AI output provide citations or references to its sources of information?

- Is the information generated by the AI tool inclusive and representative of the full subject matter, perspectives or people?

What Can You Do to Assess for Accuracy?

- Experiment with the platform using topics or subject matter where you have a depth of knowledge or understanding.

- Review the generated output to determine if the information provided is accurate and includes diverse perspectives or viewpoints.

- Revise the prompt or instructions based on needs for specific grade levels or ability levels and review the output for accuracy.

- Continue to evaluate the generated output and report any machine errors through the platform’s feedback system.

Z: Zero-in on Bias

The design, development, data and training of the digital platform intentionally address unfair or prejudicial output generated by the AI tool.

Key Questions to Consider:

- What data or information sources have been used to train the AI tool?

- What is the process or option for correcting, reporting or providing feedback on the platform’s bias? When prompted, can the AI correct its bias?

- How does the platform make an effort to detect and mitigate the AI tool’s bias?

- Where is the potential for unfair or prejudicial treatment when using the AI tool with students? (For example, assessment, grading or feedback tools may evaluate students and/or predict their success differently based on their gender, race and ethnicity, geography, etc., rather than their individual performance and abilities.)

- How can educators and students engage with AI to keep humans in the loop?

What Can You Do to Zero-in on Bias?

- Experiment with the platform using topics or subject matter where you have a depth of knowledge or understanding.

- Review the generated output to determine if the information provided reflects and/or is representative of gender, race and ethnicity, geography, perspectives, etc.

- If using a grading or feedback tool, regularly check the AI output to ensure fair treatment and accurate assessment of students. Support and encourage students to review the grades or feedback generated by the AI tool, and provide opportunities for a human in the loop to intervene or make adjustments.

- Continue to evaluate the generated output and report any bias or machine errors through the platform’s feedback system.

E: Evaluate Value

The digital platform and AI tool serve a meaningful purpose for efficiency, instruction or learning.

Key Questions to Consider:

- What is the overall purpose or intent of the AI tool?

- Can this AI tool be leveraged to automate tasks or to improve productivity?

- How would this AI tool improve instruction and teaching strategies?

- How could students benefit from this AI tool? How could this tool help reduce barriers for student learning?

- When would it be appropriate for students to use this platform with their teacher or independently?

What Can You Do to Evaluate Value?

- Explore available digital platforms to learn more about the platform’s AI functions and features.

- Consider the AI tool’s potential application or purpose – for the educator and/or for the student.

- Select 2-3 platforms to build proficiency and develop expertise.

- Try short exercises with students that model and scaffold AI integration in the classroom.

To explore more from AI with the Friday Institute, access our Discover AI in Education resources or send an email to AIwiththeFI@ncsu.edu. To join our network of educators who use AI and share their expertise and experiences, contribute your ideas and uses of AI tools in education at go.ncsu.edu/ailearninglabsubmission.
