Blueprint for an AI Bill of Rights: Key Questions for Schools and Districts

Created by the Friday Institute for Educational Innovation at the NC State University College of Education, 2023.

Shared under CC BY-NC-SA 4.0

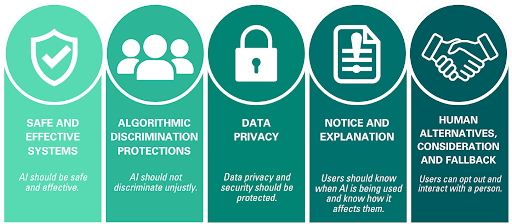

In October 2022, the White House published the Blueprint for an AI Bill of Rights: Making Automated Systems Work for the American People. The blueprint provides a framework of five principles, or protections, that should be applied “with respect to all automated systems that have the potential to meaningfully impact individuals’ or communities’ exercise of rights, opportunities, or access.” From a K-12 perspective, these principles provide the fundamental building blocks for understanding and designing safe, responsible and ethical environments.

The Friday Institute for Educational Innovation at North Carolina State University has curated key questions for each principle to help educators understand why it matters and what it means for their school or district. Share your own observations and experiences from a K-12 perspective at go.ncsu.edu/k12aiperspective.

Safe and Effective Systems

Key Questions for Safe and Effective Systems:

- What does it mean to have a safe and effective system for our students?

- How can we make sure that automated systems are safe for use in schools?

- How should schools weigh the benefits of AI against the need to keep students safe?

- In what situations should a person stop using an AI system?

- How can we prevent AI systems in schools from causing unexpected problems or dangers to students? What steps can be taken to identify and fix these issues?

- We know it is important for people to avoid misusing personal information with AI. How can we keep people, especially students, from sharing information they should not?

Algorithmic Discrimination Protection

Key Questions for Algorithmic Discrimination Protection:

- How can issues of algorithmic discrimination be addressed when they arise, whether in the classroom, school, district or even industry?

- What does it mean that AI should not discriminate unjustly? How can we protect students from algorithmic discrimination?

- We know that the use of representative and unbiased data is crucial in AI systems. What role do educators have in ensuring that AI systems are not discriminating against students?

Data Privacy

Key Questions for Data Privacy:

- What does it mean to have data privacy for students, especially in the age of AI?

- How would a school district prioritize student privacy as it looks to integrate AI?

- What specific protections are already in place for data in sensitive areas such as health, education and counseling services? How might these protections need to change with AI integration?

- Given the risks associated with continuous surveillance and monitoring, what policies could a school district have to safeguard student rights and privacy? How would a school district enforce these policies?

Notice and Explanation

Key Questions for Notice and Explanation:

- What does it mean to provide notice and explanation for AI?

- What processes can schools or districts use to communicate and remain transparent when using AI with students?

- What type of notice or explanation should a student, family or educator receive if a decision or grade was made or influenced by AI (especially if this outcome impacts their learning or employment)?

- How can students, families and educators in a school or district receive clear, accessible and understandable information about the AI and automated systems being used in their schools or classrooms?

Human Alternatives, Consideration, and Fallback

Key Questions for Human Alternatives, Consideration, and Fallback:

- What does it mean to offer human alternatives, consideration and fallback?

- What training do educators need to provide support and resolution when automated systems are contested or appealed by students or parents?

- How would a school or district enable students and their families to opt out of automated systems in favor of human interaction or support, particularly in situations where AI might not be the most appropriate choice?

- Can you describe the fallback processes an educator, school or district may have in place for when an automated system fails or makes an error? How can these fallback processes be accessible and equitable for all students, regardless of their background or abilities?

To learn more about AI with the Friday Institute, explore our Discover AI in Education resources or email AiwiththeFI@ncsu.edu. To join our network of educators who use AI and share their expertise and experiences, contribute your ideas and uses of AI tools in education at go.ncsu.edu/ailearninglabsubmission.